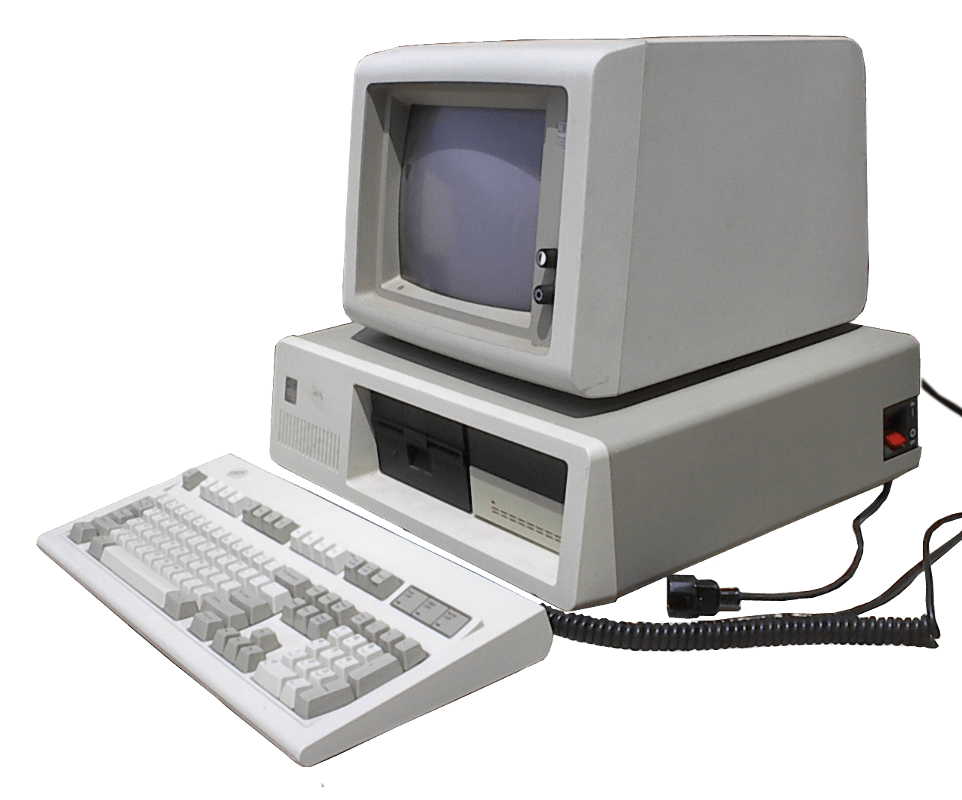

As the focus of wearable tech shifts from quantified self and smartphone notifications to content creation, radically new user interaction models are needed. In the PC paradigm, the interaction model involved an individual sitting in front of a computer terminal to enter and manipulate content via the keyboard and mouse. This model of human-computer interaction underlay the design methodology behind the Microsoft Windows operating system and the Microsoft Office suite.

In an interview, Bill Gates acknowledged that with wearables, the computing paradigm has to change. The content creation constructs of the PC era should not be assumed to be the right metaphors for wearable computing. For enterprise software capabilities to be successful in the wearables context, the user interaction models and metaphors need to undergo fundamental change.

Moreover, content creation has to be smarter with machine learning that is context aware and predictive, anticipating your need before the need arises. In the PC world, the machine is completely dependent upon the user to enter information into the system for it to render the information or to compute on entered values.

Wearable computing opens a new chapter of computing consciousness. It knows where you are, what you are doing, and, over time, can predict what you want to do and what information you need at a given moment. In this regard, content creation no longer starts with the user entering the first piece of information. The integrated ecosystem of smart wearables and sensors should be able to help supply information to spur content creation, co-author content with you, and in other cases create the content for you.

Augmented reality smart glasses have the potential to organize information in a 3D vector-based, holographic interface that lets you interact with content in brand new ways, using hand gestures in the ambient air to enter, view, and manipulate it.

We are starting to see the keyboard evolve. Samsung’s augmented reality wearables keyboard (patent) uses the hand itself as the keyboard by assigning each part of your hand a different letter to touch. For content consumption on small form factors, speed-reading wearable apps such as Spritz allow you to read 250 to 1,000 words per minute (wpm). Even so, we have leaps and bounds to go before the computing metaphors for wearables are re-imagined.

New wearable input devices are on the rise. The Logbar Ring controls apps and appliances through gestures. The Myo gesture control bracelet detects motion and muscle contraction, and it can control the Google Glass display through WearScript, a JavaScript environment that runs on Google Glass and allows developers to experiment with new human-machine interface (HMI) concepts.

Aside from hand gesture-based interaction models, there are other input designs to consider. For instance, voice dictation technology, though imperfect at present, will in the future be able to detect barely audible whispers picked up through jawbone conduction. It could then transcribe much of the text that you would otherwise have to type.

Projecting several decades into the future, systems that build on early brain control research experiments, such as mind-reading devices like NeuroSky’s MindWave or InteraXon’s Muse, may decipher your electrical brain waves into coherent directives to systems and devices without you having to lift a finger. Using electroencephalography (EEG) and electromyography (EMG) technology, the brain-computer interface measures the patterns of neural interaction, quantified in amplitudes and frequencies, to understand the brain’s state, such as concentration and relaxation.

Drive Greater Automation Through Artificial Intelligence

Enterprise productivity applications consume the lion’s share of IT budgets. Peel away the costly implementation and maintenance of complex system integrations, customized presentation layers, functionality, workflow, business logic, calculations, data warehouses, and data marts, and these beastly enterprise systems, at the end of the day, are only as robust as the data entered. Take, for instance, a CRM system. Copious data entry and upkeep by the sales force is required for senior management to derive meaningful, actionable insight into current and forward-looking sales activities, trends, and metrics. Salespeople, by the nature of their function, generate the greatest sales lift when they are engaged in belly-to-belly, revenue-driving activities. Unfortunately, CRMs, much like other ERP systems, are massive data entry platforms. Though SAP, Oracle, Microsoft, Salesforce.com, and other major ERP companies may disagree, these are outdated models where the value of the system is highly dependent upon human data input and maintenance.

The machine-to-machine ERP systems of the future must become smarter. Wearables need to understand context, connect seemingly disparate information into meaningful insights, and anticipate the user’s needs before a request. In today’s CRM world, after a prospect or client meeting, a salesperson would need to manually type his or her call report into the system. In some cases, the meeting notes or an audio recording of the meeting are passed to administrative staff, onshore or offshore, for data entry. In the course of updating the account, contact, and opportunity records, there may be manual input into numerous forms and data fields. Generating a summary from the call report again requires human intervention.

An AI-based ERP system working symbiotically with smart wearables, on the other hand, could first profile the meeting participants, automatically note the attendees, and update or fetch contact information from its database and external sources, such as social media. Moreover, it would not simply transcribe spoken words into meeting notes, but it would also decipher key points versus extraneous conversations and document decision criteria, decision makers, and next steps, automatically updating opportunities and creating action items for the salesperson to review. Computer vision data relayed to the ERP machine learning system would evaluate participants’ facial gestures and body cues for non-verbal buy signals and congruency between what was said and how they felt.

When working on a multinational account, there could be multiple opportunities scattered across global geographies, covered by different regional sales reps in your organization. The information that your field office has is likely to be limited and localized. Stitching localized information together across your worldwide sales regions to gain better insight into a prospect’s business strategy and enterprise initiatives is difficult at best. An intelligent ERP system could comb through the local office information on a particular prospect to come up with inductive insights that could shed light on the bigger opportunity at hand that the request for proposal (RFP) does not explicitly state. Being able to connect the dots in order to understand the strategic drivers would give your sales team a leg up on the competition.

Some Firms Will Be Left in the Dust

Enterprise software players will be challenged to deviate from their DNA. To invent new-to-the-world models of how users can interact in the wearable context, they will need to develop intuitive gestural, eye-tracking, and mind-based means of capturing information and then present that information in a meaningful way to increase worker productivity.

Multimedia and creativity software providers such as Adobe, Autodesk, and PTC, with their 3D vector-based design solutions for the architecture, engineering, construction, manufacturing, media, and entertainment industries, may fare better, since the transition to a gestural computing model would be more intuitive for in-the-air, ambient, holographic manipulation.

In the next decade, expect the creation of brand new household names that will overshadow the pioneers of the PC industry and desktop-based Internet from the 1980s and 1990s.

Smart wearables will evolve computing in ways that most of us can’t begin to imagine. One thing is for sure: PC-based interaction models for content creation are not suitable for wearables. Before entrepreneurs look to port enterprise productivity capabilities to the ambient air, it’s imperative that they don’t default to the virtual augmented keyboard and graphical user interface of yesterday.

Originally published on Wired on April 3, 2014. Author Scott Amyx.